By Excellence First Enterprise Consultancy (EFEC).

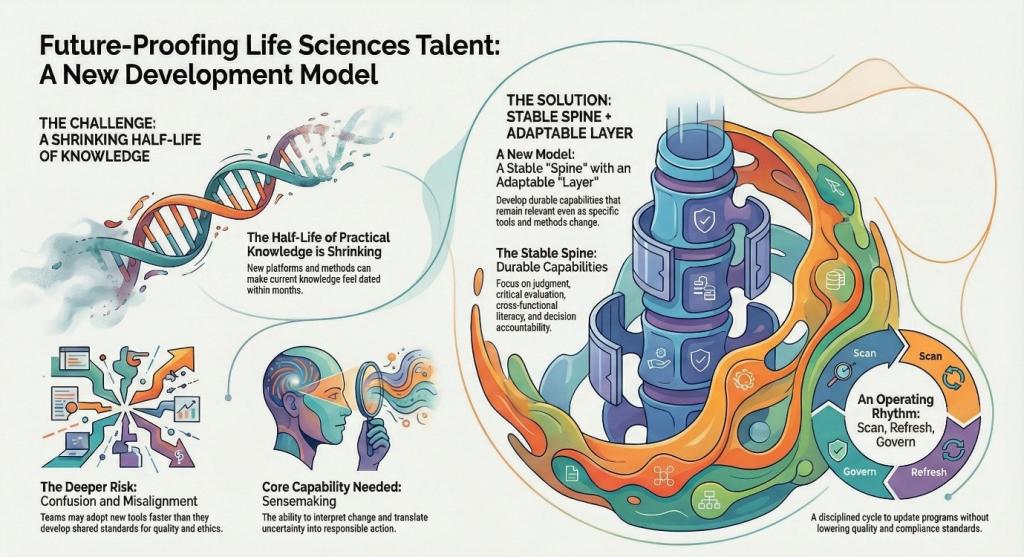

As tools and expectations evolve ever faster, life sciences talent development becomes a time-dynamic challenge. This article proposes a simple model: a stable spine of durable capabilities, kept current through a scan–refresh–govern operating rhythm.

The life sciences sector is entering a period where the “half-life” of practical knowledge is shortening. New modalities, platforms, regulatory expectations, data ecosystems, and AI-enabled workflows are evolving quickly enough that what feels current today can look dated within months.

For employers, educators, and ecosystem partners, this is no longer just a content challenge (“what should people learn?”). It is a time-dynamic challenge: how do we develop talent that can interpret change accurately, make responsible decisions, and adapt—without losing scientific and operational rigour?

The deeper challenge: not just new tools, but durable judgement under acceleration

In fast-moving conditions, the greatest risk is not simply that training materials become outdated. The deeper risk is confusion and misalignment: teams may adopt new methods faster than they can develop shared standards for quality, ethics, compliance, and accountability.

In life sciences, that risk is amplified because decisions are made with patient impact, regulatory scrutiny, IP sensitivity, and long evidence cycles all in play. Practical questions surface repeatedly:

- What has actually changed in our domain—and what remains stable?

- Where are the boundaries and risks (quality, bias, privacy, reproducibility, safety, accountability)?

- What is an appropriate use of emerging methods in this context—and what is not?

- How should decisions be documented and justified when tools and practices keep shifting?

From a talent perspective, this points to a capability that often sits underneath technical skills: sensemaking, the disciplined practice of interpreting change, separating signal from noise, and translating uncertainty into responsible action. In practice, sensemaking (sometimes described as “managing meaning”) means clarifying what new information does and does not imply, so that teams can align and act responsibly.

A talent development approach: stable spine, adaptable applied layer

Within the EFEC UK–China Life Sciences Innovation Hub, one working hypothesis is simple: talent development needs a stable “spine” of durable capabilities, with an applied layer that can evolve without destabilising standards.

A simple model for life sciences talent development: a stable spine of durable capabilities, refreshed through an operating rhythm (scan, refresh, govern) as methods and tools evolve.

The stable spine emphasises capabilities that remain relevant even as tools change, such as:

- scientific and ethical judgement (including decision accountability)

- critical evaluation of evidence, outputs, and claims (reproducibility, validation, limitations)

- domain-grounded application (how new methods change real workflows in R&D, clinical, manufacturing, and commercial functions)

- cross-functional literacy (data, regulation, quality systems, and practical constraints)

- reflective practice: communicating uncertainty and learning responsibly over time

The applied layer—examples, workflows, case studies, tool stacks, and current practice signals—can then be refreshed periodically, so programmes remain relevant without turning learning into a constant chase for novelty.

An operating rhythm designed for shorter cycles (without lowering the bar)

A practical implication is that talent programmes may need a reliable renewal loop—not frequent reinvention, but steady refresh with explicit governance. One simple rhythm might look like:

- Scan (lightweight): review meaningful shifts (adoption signals, regulatory directions, methodological advances), without chasing headlines.

- Refresh (controlled): update casework, examples, and assessments at defined intervals, without moving the goalposts mid-cohort.

- Govern (credible): maintain clear sign-off, documentation, and quality control so relevance increases without eroding standards.

This type of rhythm is especially important in life sciences because credibility is cumulative: once confidence is lost (in data quality, compliance, or validation discipline), it is difficult to recover.

Making sensemaking visible: from “knowing” to “showing judgement”

If sensemaking is a core capability, it should show up in what learners produce—not only what they can recall. One practical method is a recurring learner artefact such as a Change Log + Decision Rationale, where participants demonstrate:

- what changed in the landscape (and what did not)

- why it matters for their specific context

- what decision they made (or would recommend)

- what risks and constraints they considered (quality, privacy, safety, regulatory, operational)

- how they would monitor outcomes and revise decisions over time

In life sciences settings, this is often closer to the real work than a “tool proficiency” checklist. It also supports a culture of traceability and accountability, which matters across research, clinical translation, and regulated environments.

Where this perspective is coming from

The EFEC UK–China Life Sciences Innovation Hub is exploring how ecosystem partners can support talent development that is both future-ready and credible—anchored in UK priorities and standards, while staying open to international collaboration where it adds practical value.

We share this perspective as a scene-setter for ongoing conversations: as the pace of change accelerates, talent development needs to move faster—but also remain steady, governed, and evidence-led.

Invitation to dialogue

If you are working on life sciences capability building—within a company, university, accelerator, NHS-adjacent setting, or ecosystem network—we welcome shared learning on what is working. The question is no longer whether change will reshape skills demand, but how we build talent systems that remain trustworthy as conditions keep shifting.

Originally published on Cambridge Network, 8 December 2025.

Disclaimer: This article reflects EFEC’s independent perspective and is shared for dialogue. It does not represent the official positions of any partner institutions.